What is Present Day Theoretical Chemistry About?

Structure theory

For information about researchers posted after Oct. 1, 2009, please go to this link; it covers electronic structure, molecular dynamics, and statistical mechanics.

A. Electronic structure theory describes the motions of the electrons and produces energy surfaces

The shapes and geometries of molecules, their electronic,

vibrational, and rotational energy levels and wavefunctions, as well

as the interactions of these states with electromagnetic fields lie

within the realm of structure theory.

In the Born-Oppenheimer

model of molecular structure, it is assumed that the electrons move

so quickly that they can adjust their motions essentially

instantaneously with respect to any movements of the heavier and

slower moving atomic nuclei. This assumption motivates us to view the electrons as moving in electronic wave functions (orbitals within the simplest and most commonly employed theories) that smoothly "ride" the molecule's atomic framework. These electronic functions are found by solving a Schrödinger equation whose Hamiltonian $H_e$ contains the kinetic energy $T_e$ of the electrons, the Coulomb repulsions $V_{ee}$ among all the molecule's electrons, the Coulomb attractions $V_{en}$ among the electrons and all of the molecule's nuclei (treated with these nuclei held clamped), and the Coulomb repulsions $V_{nn}$ among all of these nuclei. The electronic wave functions $\psi_k$ and energies $E_k$ that result from solving the electronic Schrödinger equation

$$H_e\,\psi_k = E_k\,\psi_k$$

thus depend on the locations $\{Q_i\}$ at which the nuclei are sitting. That is, the $E_k$ and $\psi_k$ are parametric functions of the coordinates of the nuclei.

The dependence of these electronic energies on the positions of the atomic centers causes them to be referred to as electronic energy surfaces, such as that depicted below for a diatomic molecule, where the energy depends only on one interatomic distance $R$.
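A one-dimensional energy surface of this kind can be sketched numerically. The example below is only an illustration: it assumes a Morse function as a stand-in for $E_k(R)$, and the parameter values (`D_e`, `a`, `R_e`) are hypothetical choices, not results of any calculation discussed in this text.

```python
import numpy as np

def morse_energy(R, D_e=4.5, a=1.9, R_e=0.74):
    """Model diatomic energy curve E(R) in eV, with R in Angstrom.

    D_e (well depth), a (range parameter) and R_e (equilibrium distance)
    are illustrative assumptions. The zero of energy is the separated atoms.
    """
    return D_e * (1.0 - np.exp(-a * (R - R_e))) ** 2 - D_e

# Tabulate the curve on a grid with 0.001 Angstrom spacing and locate its minimum.
R_grid = np.linspace(0.4, 5.0, 4601)
E_grid = morse_energy(R_grid)
R_min = R_grid[np.argmin(E_grid)]
E_min = E_grid.min()
print(f"minimum near R = {R_min:.3f} A, E = {E_min:.3f} eV")
```

For a polyatomic molecule the same idea applies, but `R` becomes a vector of 3N-6 internal coordinates and the curve becomes a multidimensional surface.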

For non-linear polyatomic molecules having N atoms, the energy

surfaces depend on 3N-6 internal coordinates and thus can be very

difficult to visualize. A slice through such a surface (i.e., a plot

of the energy as a function of two out of 3N-6 coordinates) is shown

below and various features of such a surface are detailed.

The Born-Oppenheimer theory of molecular structure is soundly based in that it can be derived from a starting point consisting of a Schrödinger equation describing the kinetic energies of all electrons and of all N nuclei plus the Coulomb potential energies of interaction among all electrons and nuclei. By expanding the wavefunction $\Psi$ that is an eigenfunction of this full Schrödinger equation in the complete set of functions $\{\psi_k\}$ and then neglecting all terms that involve derivatives of any $\psi_k$ with respect to the nuclear positions $\{Q_i\}$, one can separate variables such that:

1. The electronic wavefunctions and energies must obey

$$H_e\,\psi_k = E_k\,\psi_k$$

2. The nuclear motion (i.e., vibration/rotation) wavefunctions must obey

$$(T_N + E_k)\,\chi_{k,L} = E_{k,L}\,\chi_{k,L},$$

where $T_N$ is the kinetic energy operator for movement of all nuclei.

That is, each and every electronic energy state, labeled k, has a set, labeled L, of vibration/rotation energy levels $E_{k,L}$ and wavefunctions $\chi_{k,L}$.

Because the Born-Oppenheimer model is obtained from the full Schrödinger equation by making approximations (e.g., neglecting certain terms that are called non-adiabatic coupling terms), it is not exact. Thus, in certain circumstances it becomes necessary to correct the predictions of the Born-Oppenheimer theory (i.e., by including the effects of the neglected non-adiabatic coupling terms using perturbation theory).

For example, when developing a theoretical model to interpret the rate at which electrons are ejected from rotationally/vibrationally hot NH$^-$ ions, we had to consider coupling between two states having the same total energy:

1. $^2\Pi$ NH$^-$ in its v=1 vibrational level and in a high rotational level (e.g., J > 30) prepared by laser excitation of vibrationally "cold" NH$^-$ in v=0 having high J (due to natural Boltzmann populations); see the figure below, and

2. $^3\Sigma$ NH neutral plus an ejected electron in which the NH is in its v=0 vibrational level (no higher level is energetically accessible) and in various rotational levels (labeled N).

Because NH has an electron affinity of 0.4 eV, the total energies of the above two states can be equal only if the kinetic energy KE carried away by the ejected electron obeys:

$$KE = E_{\mathrm{vib/rot}}\big(\mathrm{NH}^-(v=1, J)\big) - E_{\mathrm{vib/rot}}\big(\mathrm{NH}(v=0, N)\big) - 0.4\ \mathrm{eV}.$$
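The energy balance above is simple arithmetic, and a short sketch makes the "open channel" condition explicit. The function name and the input energies below are hypothetical; in particular, the sketch assumes each vibration/rotation energy is measured from the lowest level of its own species, so that the 0.4 eV electron affinity accounts for the offset between the two.

```python
EA_NH_EV = 0.4  # electron affinity of NH quoted in the text (eV)

def ejected_electron_ke(e_anion_vibrot, e_neutral_vibrot, electron_affinity=EA_NH_EV):
    """Kinetic energy (eV) of the detached electron, or None if the channel is closed.

    Assumption: both vib/rot energies are measured from the lowest level of
    their own species, so the electron affinity is the only offset needed.
    """
    ke = e_anion_vibrot - e_neutral_vibrot - electron_affinity
    return ke if ke >= 0.0 else None

# Hypothetical inputs chosen only to exercise the formula (not measured NH- values).
open_channel = ejected_electron_ke(0.75, 0.25)    # internal energy exceeds the threshold
closed_channel = ejected_electron_ke(0.30, 0.10)  # not enough internal energy to detach
print(open_channel, closed_channel)
```

A negative balance means the anion level lies below the neutral-plus-electron threshold, so autodetachment from that level is energetically impossible.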

In the absence of any non-adiabatic coupling terms, these two isoenergetic states would not be coupled and no electron detachment would occur. It is only by the anion converting some of its vibration/rotation energy and angular momentum into electronic energy that the electron that occupies a bound N 2p orbital in NH$^-$ can gain enough energy to be ejected.

Energies of NH$^-$ and of NH pertinent to the autodetachment of v=1, J levels of NH$^-$ formed by laser excitation of v=0, J'' NH$^-$.

My own research efforts have, for many years, involved studying negative molecular ions (a field in which Professor Ken Jordan is a leading figure) taking into account such non Born-Oppenheimer couplings, especially in cases where vibration/rotation energy transferred to electronic motions causes electron detachment as in the NH- case detailed above.


My good friend, Professor Yngve Öhrn, has been active in attempting to avoid making the Born-Oppenheimer approximation and, instead, treating the dynamical motions of the nuclei and electrons simultaneously. Professor David Yarkony has contributed much to the recent treatment of non-adiabatic (i.e., non Born-Oppenheimer) effects and to the inclusion of spin-orbit coupling in such studies.


The knowledge gained via structure theory is great. The electronic energies $E_k(Q)$ allow one to determine (see my book Energetic Principles of Chemical Reactions) the geometries and relative energies of various isomers that a molecule can assume by finding those geometries $\{Q_i\}$ at which the energy surface $E_k$ has minima, $\partial E_k/\partial Q_i = 0$, with all directions having positive curvature (this is monitored by considering the so-called Hessian matrix $H_{i,j} = \partial^2 E_k/\partial Q_i\,\partial Q_j$; if none of its eigenvalues are negative, all directions have positive curvature). Such geometries describe stable isomers, and the energy at each such isomer geometry gives the relative energy of that isomer. Professor Berny Schlegel at Wayne State has been one of the leading figures whose efforts are devoted to using gradient and Hessian information to locate stable structures and transition states. Professor Peter Pulay has done as much as anyone to develop the theory that allows us to compute the gradients and Hessians appropriate to the most commonly used electronic structure methods. His group has also pioneered the development of so-called local correlation methods, which focus on using localized orbitals to compute correlation energies in a manner that scales less severely with system size than when delocalized canonical molecular orbitals are employed.
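The stationary-point test described above (vanishing gradient, then inspection of the Hessian eigenvalues) can be sketched in a few lines. This is a minimal illustration, not the analytic-derivative machinery used in production codes: the two-coordinate `model_surface` is a hypothetical function, and the derivatives are taken by finite differences.

```python
import numpy as np

def model_surface(q):
    """Hypothetical two-coordinate energy surface: minima at (+-1, 0), saddle at (0, 0)."""
    x, y = q
    return (x**2 - 1.0) ** 2 + 2.0 * y**2

def gradient(f, q, h=1e-5):
    """Central-difference gradient dE/dQ_i."""
    q = np.asarray(q, dtype=float)
    g = np.zeros_like(q)
    for i in range(q.size):
        step = np.zeros_like(q)
        step[i] = h
        g[i] = (f(q + step) - f(q - step)) / (2.0 * h)
    return g

def hessian(f, q, h=1e-4):
    """Central-difference Hessian d2E/dQ_i dQ_j."""
    q = np.asarray(q, dtype=float)
    n = q.size
    H = np.zeros((n, n))
    for i in range(n):
        for j in range(n):
            ei = np.zeros(n)
            ej = np.zeros(n)
            ei[i] = h
            ej[j] = h
            H[i, j] = (f(q + ei + ej) - f(q + ei - ej)
                       - f(q - ei + ej) + f(q - ei - ej)) / (4.0 * h * h)
    return H

def classify(f, q, tol=1e-6):
    """Label a geometry by its gradient norm and the signs of the Hessian eigenvalues."""
    if np.linalg.norm(gradient(f, q)) > tol:
        return "not stationary"
    eigenvalues = np.linalg.eigvalsh(hessian(f, q))
    n_negative = int(np.sum(eigenvalues < -tol))
    if n_negative == 0:
        return "minimum"
    if n_negative == 1:
        return "transition state"
    return "higher-order saddle"

for point in ([1.0, 0.0], [0.0, 0.0], [0.5, 0.0]):
    print(point, classify(model_surface, point))
```

A geometry with exactly one negative Hessian eigenvalue is labeled a transition state here, which is the usual convention for a first-order saddle point.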


At any geometry $\{Q_i\}$, the gradient or slope vector having components $\partial E_k/\partial Q_i$ provides the forces ($F_i = -\partial E_k/\partial Q_i$) along each of the coordinates $Q_i$. These forces are used in molecular dynamics simulations (see the following section), which solve the Newton $F = m a$ equations, and in molecular mechanics studies, which are aimed at locating those geometries where the $F$ vector vanishes (i.e., the stable isomers and transition states discussed above).
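To make the link between forces and dynamics concrete, here is a minimal sketch of how $F = -\partial E/\partial Q$ feeds Newton's equations. It assumes a hypothetical one-coordinate harmonic surface in arbitrary units and uses the velocity Verlet integrator, which is one common choice and not necessarily the scheme used in any particular program.

```python
import math

def energy(q, k=1.0):
    """Hypothetical harmonic energy surface E(Q) = k Q^2 / 2 (arbitrary units)."""
    return 0.5 * k * q**2

def force(q, k=1.0):
    """F = -dE/dQ for the model surface above."""
    return -k * q

def velocity_verlet(q, v, mass=1.0, dt=0.01, n_steps=1000):
    """Integrate F = m a with the velocity Verlet scheme; returns the final (q, v)."""
    f = force(q)
    for _ in range(n_steps):
        v_half = v + 0.5 * dt * f / mass   # half-step velocity update
        q = q + dt * v_half                # full-step position update
        f = force(q)                       # force at the new position
        v = v_half + 0.5 * dt * f / mass   # complete the velocity update
    return q, v

q0, v0 = 1.0, 0.0
q1, v1 = velocity_verlet(q0, v0)
e_start = energy(q0) + 0.5 * v0**2
e_end = energy(q1) + 0.5 * v1**2
print(f"q(t=10) = {q1:.5f}, analytic cos(10) = {math.cos(10.0):.5f}")
print(f"energy drift = {e_end - e_start:.2e}")
```

In a real simulation `force` would be replaced by the gradient of an electronic energy surface or of a force field, evaluated for all 3N nuclear coordinates.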

Also produced in electronic structure simulations are the electronic wavefunctions $\{\psi_k\}$ and energies $\{E_k\}$ of each of the electronic states. The separation in energies can be used to make predictions about the spectroscopy of the system. The wavefunctions can be used to evaluate properties of the system that depend on the spatial distribution of the electrons in the system. For example, the z-component of the dipole moment of a molecule, $\mu_z$, can be computed by integrating the probability density for finding an electron at position $\mathbf{r}$ multiplied by the z-coordinate of the electron and the electron's charge e: $\mu_z = \int e\,\psi_k^*\,\psi_k\,z\,d\mathbf{r}$. The average kinetic energy of an electron can also be computed by carrying out such an average-value integral: $\int \psi_k^*\left(-\frac{\hbar^2}{2m_e}\nabla^2\right)\psi_k\,d\mathbf{r}$. The rules for computing the average value of any physical observable are developed and illustrated in popular undergraduate textbooks on physical chemistry (e.g., Atkins' text or Berry, Rice, and Ross) and in graduate-level texts (e.g., Levine, McQuarrie, Simons and Nichols).
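As an illustration of such an average-value integral, the sketch below evaluates the kinetic energy for a case where the answer is known analytically. It assumes the hydrogen 1s orbital in atomic units ($\hbar = m_e = e = 1$), for which the exact result is 0.5 hartree; the grid and the helper `trapezoid` are choices made here for illustration.

```python
import numpy as np

def trapezoid(y, x):
    """Plain trapezoid-rule integral of samples y on grid x."""
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(x)))

# Hydrogen 1s orbital in atomic units: psi(r) = exp(-r) / sqrt(pi)
r = np.linspace(1e-6, 40.0, 400001)
psi = np.exp(-r) / np.sqrt(np.pi)

# For a spherically symmetric function the Laplacian is psi'' + (2/r) psi'.
# Here psi' = -psi and psi'' = psi, so the Laplacian is psi - 2 psi / r.
laplacian_psi = psi - 2.0 * psi / r

volume_element = 4.0 * np.pi * r**2          # angular integration already done
norm = trapezoid(psi * psi * volume_element, r)
kinetic = trapezoid(psi * (-0.5 * laplacian_psi) * volume_element, r)
print(f"norm = {norm:.6f}, <T> = {kinetic:.6f} hartree")
```

The dipole integral has the same structure, with the operator $-\frac{1}{2}\nabla^2$ replaced by multiplication by $e\,z$; for this spherically symmetric orbital it vanishes by symmetry.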

|

|

|

|

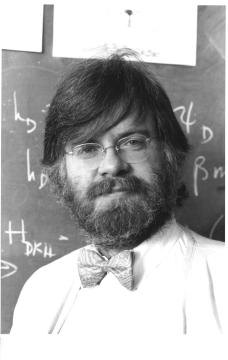

one of the most broad-based members of the theory community |

|

Prof. Stuart Rice, University of Chicago. He has done as much as anyone in a tremendous variety of theoretical studies including studies of interfaces and using coherent external perturbations to control chemical processes.

Not only can electronic wavefunctions tell us about the average values of all physical properties for any particular state (i.e., $\psi_k$ above), but they also allow us to tell how a specific "perturbation" (e.g., an electric field in the Stark effect, a magnetic field in the Zeeman effect, light's electromagnetic fields in spectroscopy) can alter the specific state of interest. For example, the perturbation arising from the electric field of a photon interacting with the electrons in a molecule is given within the so-called electric dipole approximation (see, for example, Simons and Nichols, Ch. 14) by:

$$H_{\mathrm{pert}} = \sum_j e\,\mathbf{r}_j \cdot \mathbf{E}(t)$$

where $\mathbf{E}$ is the electric field vector of the light, which depends on time t in an oscillatory manner, and $\mathbf{r}_j$ is the vector denoting the spatial coordinates of the jth electron. This perturbation, $H_{\mathrm{pert}}$, acting on an electronic state $\psi_k$ can induce transitions to other states $\psi_{k'}$ with probabilities that are proportional to the square of the integral

$$\int \psi_{k'}^*\,H_{\mathrm{pert}}\,\psi_k\,d\mathbf{r}.$$

So, if this integral were to vanish, transitions between $\psi_k$ and $\psi_{k'}$ would not occur, and would be referred to as "forbidden". Whether such integrals vanish or not often is determined by symmetry. For example, if $\psi_k$ were of odd symmetry under a plane of symmetry $\sigma_v$ of the molecule, while $\psi_{k'}$ were even under $\sigma_v$, then the integral would vanish unless one or more of the three cartesian components of the dot product $\mathbf{r}_j \cdot \mathbf{E}$ were odd under $\sigma_v$. The general idea is that for the integral to not vanish, the direct product of the symmetries of $\psi_k$ and of $\psi_{k'}$ must match the symmetry of at least one of the symmetry components present in $H_{\mathrm{pert}}$. Professor Poul Jørgensen has been especially involved in developing such so-called response theories for perturbations that may be time dependent (e.g., as in the interaction of light's electromagnetic radiation).
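The symmetry argument can be checked numerically on a one-dimensional analog. In this sketch, reflection $x \to -x$ plays the role of the symmetry plane $\sigma_v$, the operator $x$ stands in for one Cartesian component of $\mathbf{r}\cdot\mathbf{E}$, and the three (unnormalized) harmonic-oscillator functions are hypothetical stand-ins for electronic states.

```python
import numpy as np

x = np.linspace(-8.0, 8.0, 16001)
dx = x[1] - x[0]

# Lowest three harmonic-oscillator functions (unnormalized):
# even, odd, and even under the reflection x -> -x.
psi0 = np.exp(-x**2 / 2.0)
psi1 = x * np.exp(-x**2 / 2.0)
psi2 = (2.0 * x**2 - 1.0) * np.exp(-x**2 / 2.0)

def dipole_integral(bra, ket):
    """Approximate the integral of bra * x * ket by a Riemann sum on the grid."""
    return float(np.sum(bra * x * ket) * dx)

allowed = dipole_integral(psi1, psi0)     # odd * odd * even: even integrand, survives
forbidden = dipole_integral(psi2, psi0)   # even * odd * even: odd integrand, vanishes
print(f"odd <- even : {allowed:.6f}")
print(f"even <- even: {forbidden:.2e}")
```

The even-to-even integral vanishes because the operator is odd under the reflection, which is the one-dimensional version of the direct-product rule stated above.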

As a preamble to the following discussion, I wish to draw the

readers' attention to the web pages of several prominent theoretical

chemists who have made major contributions to the development and

applications of electronic structure theory and who have agreed to

allow me to cite them in this text. In addition to the people

specifically mentioned in this text for whom I have already provided

web links, I encourage you to look at the following web pages for

further information:

|

Professor Ernest Davidson, Univ. of Washington and Indiana University has contributed as much as anyone both to the development of the fundamentals of electronic structure theory and its applications to many perplexing problems in molecular structure and spectroscopy. |

|

The late Professor John Pople, Northwestern University, made developments leading to the suite of Gaussian computer codes that now constitute the most widely used electronic structure computer programs.His contributions to theory were recognized in his sharing the 1998 Nobel Prize in Chemistry. |

|

|

Professor Bill Goddard, Cal Tech. When most quantum chemists were pursuing improvements in the molecular orbital method, he returned to the valence bond theory and developed the so-called GVB methods that allow electron correlation to be included within a valence bond framework. |

|

Professor Fritz Schaefer, University of Georgia. He has carried out as many applications of modern electronic structure theory to important chemical problems as anyone. |

|

|

Professor Rod Bartlett, University of Florida. He brought the coupled-cluster method, developed earlier by others, into the mainstream of electronic structure theory. |

Professor Bernd Heb, University of Erlangen, has a special focus in his research on relativistic effects and the development of tools needed to study them.

Professor Hans-Joachim Werner, University of Stuttgart, has for many years pioneered developments of multi-configurational SCF and coupled-cluster methods and applied them to a wide variety of chemical problems.

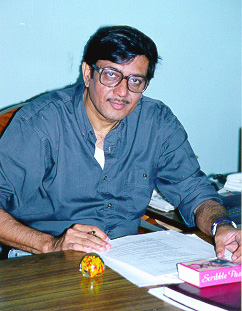

Professor David Bishop, University of Ottawa, says the following about his recent research efforts:

Precise calculations of electrical and magnetic properties of small molecules and atoms; nonlinear optical properties, Faraday effect, Kerr effect, Cotton-Mouton effect, second- and third-harmonic generation; the effect of electric fields on nuclear motion.

Professor David Bishop, University of Ottawa

Professor Sigrid Peyerimhoff, Bonn University, is one of the earliest pioneers of multi-reference configuration interaction methods and has applied these powerful tools to many important chemical species and reactions. She has prepared a wonderful web site that details much of the history of the development of quantum chemistry.

|

The late Professor Mike Zerner, University of Florida, was very influential in continuing the development of semi-empirical methods within quantum chemistry. Such methods can be applied to much larger molecules than ab initio methods, so their continued evolution is an essential component to growth in this field of theoretical chemistry. |

|

Professor Poul Jørgensen of Aarhus University

spent much of his early career developing the fundamentals of electron and polarization propagator theory. Following up on that early work, he moved on to develop the tools of response theory (time dependent and time independent) for computing a wide variety of molecular properties. His group has combined the power of response theory with several powerful wavefunctions including coupled-cluster and configuration interaction functions.

The full N-electron Schrödinger equation governing the movement of the electrons in a molecule is

$$\left[-\frac{\hbar^2}{2m_e}\sum_{i=1}^{N}\nabla_i^2 - \sum_a\sum_i \frac{Z_a e^2}{r_{ia}} + \sum_{i<j}\frac{e^2}{r_{ij}}\right]\psi = E\,\psi.$$

In this equation, i and j label the electrons and a labels the nuclei. Even at the time this material was written, this equation had been solved only for the case N=1 (i.e., for H, He$^+$, Li$^{2+}$, Be$^{3+}$, etc.). What makes the problem difficult to solve for other cases is the fact that the Coulomb potential $e^2/r_{ij}$ acting between pairs of electrons depends upon the coordinates of the two electrons $\mathbf{r}_i$ and $\mathbf{r}_j$ in a way that does not allow separation of variables to be used to decompose this single 3N-dimensional second-order differential equation into N separate 3-dimensional equations.

However, by approximating the full electron-electron Coulomb potential $\sum_{i<j} e^2/r_{ij}$ by a sum of terms, each depending on the coordinates of only one electron, $\sum_i V(\mathbf{r}_i)$, one arrives at a Schrödinger equation

$$\left[-\frac{\hbar^2}{2m_e}\sum_{i=1}^{N}\nabla_i^2 - \sum_a\sum_i \frac{Z_a e^2}{r_{ia}} + \sum_i V(\mathbf{r}_i)\right]\psi = E\,\psi$$

which is separable. That is, by assuming that

$$\psi(\mathbf{r}_1, \mathbf{r}_2, \ldots, \mathbf{r}_N) = \phi_1(\mathbf{r}_1)\,\phi_2(\mathbf{r}_2)\cdots\phi_N(\mathbf{r}_N),$$

and inserting this ansatz into the approximate Schrödinger equation, one obtains N separate Schrödinger equations:

$$\left[-\frac{\hbar^2}{2m_e}\nabla_i^2 - \sum_a \frac{Z_a e^2}{r_{ia}} + V(\mathbf{r}_i)\right]\phi_i = E_i\,\phi_i,$$

one for each of the N so-called orbitals $\phi_i$, whose energies $E_i$ are called orbital energies.

It turns out that much of the effort going on in the electronic structure area of theoretical chemistry has to do with how one can find the "best" effective potential $V(\mathbf{r})$; that is, the $V(\mathbf{r})$, which depends only on the coordinates $\mathbf{r}$ of one electron, that can best approximate the true pairwise additive Coulomb potential experienced by an electron due to the other electrons. The simplest and most commonly used approximation for $V(\mathbf{r})$ is the so-called Hartree-Fock (HF) potential:

$$V(\mathbf{r})\,\phi_i(\mathbf{r}) = \sum_j \left[\int |\phi_j(\mathbf{r}')|^2\,\frac{e^2}{|\mathbf{r}-\mathbf{r}'|}\,d\mathbf{r}'\;\phi_i(\mathbf{r}) - \int \phi_j^*(\mathbf{r}')\,\phi_i(\mathbf{r}')\,\frac{e^2}{|\mathbf{r}-\mathbf{r}'|}\,d\mathbf{r}'\;\phi_j(\mathbf{r})\right].$$

This potential, when acting on the orbital $\phi_i$, can be viewed as multiplying $\phi_i$ by a sum of potential energy terms (which is what makes it one-electron additive), each of which consists of two parts:

a. An average Coulomb repulsion $\int |\phi_j(\mathbf{r}')|^2\,\frac{e^2}{|\mathbf{r}-\mathbf{r}'|}\,d\mathbf{r}'$ between the electron in $\phi_i$ and another electron whose spatial charge distribution is given by the probability of finding this electron at location $\mathbf{r}'$ if it resides in orbital $\phi_j$: $|\phi_j(\mathbf{r}')|^2$.

b. A so-called exchange interaction between the electron in $\phi_i$ and the other electron that resides in $\phi_j$.

The sum shown above runs over all of the orbitals that are occupied in the atom or molecule.

For example, in a carbon atom, the indices i and j run over the two 1s orbitals, the two 2s orbitals, and the two 2p orbitals that have electrons in them (say $2p_x$ and $2p_y$). The potential felt by one of the 2s orbitals is obtained by setting $\phi_i = 2s$, and summing j over $j = 1s, 1s, 2s, 2s, 2p_x, 2p_y$. The term $\int |1s(\mathbf{r}')|^2\,\frac{e^2}{|\mathbf{r}-\mathbf{r}'|}\,d\mathbf{r}'\;2s(\mathbf{r})$ gives the average Coulomb repulsion between an electron in the 2s orbital and one of the two 1s electrons; $\int |2p_x(\mathbf{r}')|^2\,\frac{e^2}{|\mathbf{r}-\mathbf{r}'|}\,d\mathbf{r}'\;2s(\mathbf{r})$ gives the average repulsion between the electron in the $2p_x$ orbital and an electron in the 2s orbital; and $\int |2s(\mathbf{r}')|^2\,\frac{e^2}{|\mathbf{r}-\mathbf{r}'|}\,d\mathbf{r}'\;2s(\mathbf{r})$ describes the Coulomb repulsion between one electron in the 2s orbital and the other electron in the 2s orbital. The exchange interactions, which arise because electrons are fermions whose indistinguishability must be accounted for, have analogous interpretations.

For example, $\int 1s^*(\mathbf{r}')\,2s(\mathbf{r}')\,\frac{e^2}{|\mathbf{r}-\mathbf{r}'|}\,d\mathbf{r}'\;1s(\mathbf{r})$ is the exchange interaction between an electron in the 1s orbital and the 2s electron; $\int 2p_x^*(\mathbf{r}')\,2s(\mathbf{r}')\,\frac{e^2}{|\mathbf{r}-\mathbf{r}'|}\,d\mathbf{r}'\;2p_x(\mathbf{r})$ is the exchange interaction between an electron in the $2p_x$ orbital and the 2s electron; and $\int 2s^*(\mathbf{r}')\,2s(\mathbf{r}')\,\frac{e^2}{|\mathbf{r}-\mathbf{r}'|}\,d\mathbf{r}'\;2s(\mathbf{r})$ is the exchange interaction between a 2s orbital and itself (note that this interaction exactly cancels the corresponding Coulomb repulsion $\int |2s(\mathbf{r}')|^2\,\frac{e^2}{|\mathbf{r}-\mathbf{r}'|}\,d\mathbf{r}'\;2s(\mathbf{r})$, so one electron does not repel itself in the Hartree-Fock model).

There are two primary deficiencies with the Hartree-Fock approximation:

a. Even if the electrons were perfectly described by a wavefunction $\psi(\mathbf{r}_1, \mathbf{r}_2, \ldots, \mathbf{r}_N) = \phi_1(\mathbf{r}_1)\,\phi_2(\mathbf{r}_2)\cdots\phi_N(\mathbf{r}_N)$ in which each electron occupied an independent orbital and remained in that orbital for all time, the true interactions among the electrons $\sum_{i<j} e^2/r_{ij}$ are not perfectly represented by the sum of the average interactions.

b. The electrons in a real atom or molecule do not exist in regions of space (this is what orbitals describe) for all time; there are times during which they must move away from the regions of space they occupy most of the time in order to avoid collisions with other electrons. For this reason, we say that the motions of the electrons are correlated (i.e., where one electron is at one instant of time depends on where the other electrons are at that same time).

Let us consider the implications of each of these two

deficiencies.

To examine the difference between the true Coulomb repulsion between electrons and the Hartree-Fock potential between these same electrons, the figure shown below is useful. In this figure, which pertains to two 1s electrons in a Be atom, the nucleus is at the origin, and one of the electrons is placed at a distance from the nucleus equal to the maximum of the 1s orbital's radial probability density (near 0.13 Å). The radial coordinate of the second electron is plotted along the abscissa; this second electron is arbitrarily constrained to lie on the line connecting the nucleus and the first electron (along this direction, the inter-electronic interactions are largest). On the ordinate, there are two quantities plotted: (i) the Hartree-Fock potential (sometimes called the self-consistent field (SCF) potential) $\int |1s(\mathbf{r}')|^2\,\frac{e^2}{|\mathbf{r}-\mathbf{r}'|}\,d\mathbf{r}'$, and (ii) the so-called fluctuation potential (F), which is the true Coulombic $e^2/|\mathbf{r}-\mathbf{r}'|$ interaction potential minus the SCF potential.

As a function of the inter-electron distance, the fluctuation potential decays to zero more rapidly than does the SCF potential. However, the magnitude of F is quite large and remains so over an appreciable range of inter-electron distances. Hence, corrections to the HF-SCF picture are quite large when measured in kcal/mole. For example, the differences $\Delta E$ between the true (state-of-the-art quantum chemical calculation) energies of interaction among the four electrons in Be ($\alpha$ and $\beta$ denote the spin states of the electrons) and the HF estimates of these interactions are given in the table shown below in eV (1 eV = 23.06 kcal/mole).


These errors inherent to the HF model must be compared to the total (kinetic plus potential) energies for the Be electrons. The average value of the kinetic energy plus the Coulomb attraction to the Be nucleus plus the HF interaction potential for one of the 2s orbitals of Be with the three remaining electrons is -15.4 eV; the corresponding value for the 1s orbital is (negative and) of even larger magnitude. The HF average Coulomb interaction between the two 2s orbitals of $1s^2 2s^2$ Be is 5.95 eV. These data clearly show that corrections to the HF model represent significant fractions of the inter-electron interaction energies (e.g., 1.234 eV compared to 5.95 - 1.234 = 4.72 eV for the two 2s electrons of Be), and that the inter-electron interaction energies, in turn, constitute significant fractions of the total energy of each orbital (e.g., 5.95 - 1.234 = 4.72 eV out of -15.4 eV for a 2s orbital of Be).
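The arithmetic behind these comparisons can be laid out explicitly. The sketch below uses only the numbers quoted in the text (5.95 eV, 1.234 eV, -15.4 eV, and 1 eV = 23.06 kcal/mole); the variable names are chosen here for illustration.

```python
EV_TO_KCAL_PER_MOL = 23.06   # conversion factor quoted in the text

hf_coulomb_2s2s = 5.95       # eV, HF average Coulomb interaction of the two 2s electrons
correction_2s2s = 1.234      # eV, correction to the HF estimate for that pair
orbital_energy_2s = -15.4    # eV, HF total (kinetic plus potential) energy of a 2s orbital

corrected_interaction = hf_coulomb_2s2s - correction_2s2s
correction_fraction = correction_2s2s / corrected_interaction
interaction_fraction = corrected_interaction / abs(orbital_energy_2s)
correction_kcal = correction_2s2s * EV_TO_KCAL_PER_MOL

print(f"corrected 2s-2s interaction        : {corrected_interaction:.2f} eV")
print(f"correction / corrected interaction : {correction_fraction:.2f}")
print(f"interaction / |2s orbital energy|  : {interaction_fraction:.2f}")
print(f"correction in kcal/mole            : {correction_kcal:.1f}")
```

The last figure is the important one for chemistry: a correction of this size for a single electron pair is far larger than the 1 kcal/mole target usually meant by chemical accuracy.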

The task of describing the electronic states of atoms and molecules from first principles and in a chemically accurate manner (± 1 kcal/mole) is clearly quite formidable. The HF potential takes care of "most" of the interactions among the N electrons (which interact via long-range coulomb forces and whose dynamics requires the application of quantum physics and permutational symmetry). However, the residual fluctuation potential is large enough to cause significant corrections to the HF picture. This, in turn, necessitates the use of more sophisticated and computationally taxing techniques to reach the desired chemical accuracy.

What about the second deficiency of the HF orbital-based model? Electrons in atoms and molecules undergo dynamical motions in which their coulomb repulsions cause them to "avoid" one another at every instant of time, not only in the average-repulsion manner that the mean-field models embody. The inclusion of instantaneous spatial correlations among electrons is necessary to achieve a more accurate description of atomic and molecular electronic structure.

Some idea of how large the effects of electron correlation are and how difficult they are to treat using even the most up-to-date quantum chemistry computer codes was given above. Another way to see the problem is offered in the figure shown below. Here we have displayed on the ordinate, for helium's $^1S$ ($1s^2$) state, the probability of finding an electron whose distance from the He nucleus is 0.13 Å (the peak of the 1s orbital's density) and whose angular coordinate relative to that of the other electron is plotted on the abscissa. The He nucleus is at the origin and the second electron also has a radial coordinate of 0.13 Å. As the relative angular coordinate varies away from 0 deg, the electrons move apart; near 0 deg, the electrons approach one another. Since the two electrons have opposite spin in this state, their mutual Coulomb repulsion alone acts to keep them apart.

What this graph shows is that, for a highly accurate wavefunction (one constructed using so-called Hylleraas functions that depend explicitly on the coordinates of the two electrons as well as on their interparticle distance coordinate) that is not of the simple orbital product type, one finds a "cusp" in the probability density for finding one electron in the neighborhood of another electron of opposite spin. The probability plot for the Hylleraas function is the lower dark line in the above figure. In contrast, this same probability density, when evaluated for an orbital-product wavefunction (e.g., for the Hartree-Fock function), has no such cusp because the probability density for finding one electron at $r, \theta, \phi$ is independent of where the other electron is (due to the product nature of the wavefunction). The Hartree-Fock probability, which is not even displayed above, would thus, if plotted, be flat as a function of the angle shown above. Finally, the graph shown above that lies above the Hylleraas plot and that has no "sharp" cusp was extracted from a configuration interaction wavefunction for He obtained using a rather large correlation-consistent polarized valence quadruple-zeta atomic basis set. Even for such a sophisticated wavefunction (of the type used in many state-of-the-art ab initio calculations), the cusp in the relative probability distribution is clearly not well represented.

Although highly accurate methods do exist for handling the correlated motions of electrons (e.g., the Hylleraas method mentioned above), they have not proven to be sufficiently computationally practical to be of use on atoms and molecules containing more than a few electrons. Hence, it is common to find other methods employed in most chemical studies in which so-called correlated wavefunctions are used.

By far, the most common and widely used class of such wavefunctions involves using linear combinations of orbital product functions (actually, one must use so-called antisymmetrized orbital products to properly account for the fact that fermion wavefunctions such as those describing electrons are odd under permutations of the electrons' labels):

$$\Psi = \sum_J C_J\,|\phi_{J1}\,\phi_{J2}\,\phi_{J3}\cdots\phi_{J(N-1)}\,\phi_{JN}|,$$

with the indices J1, J2, ..., JN labeling the spin-orbitals and the coefficients $C_J$ telling how much of each particular orbital product to include. As an example, one could use

$$\Psi = C_1\,|1s\alpha\,1s\beta| - C_2\left[\,|2p_z\alpha\,2p_z\beta| - |2p_x\alpha\,2p_x\beta| - |2p_y\alpha\,2p_y\beta|\,\right]$$

as a wavefunction for the $^1S$ state of He (the last three orbital products are combined to produce a state that is spherically symmetric and thus has L = 0 electronic angular momentum just as the $|1s\alpha\,1s\beta|$ state does).

Using a little algebra, and employing the fact that the orbital products

$$|\phi_1\,\phi_2| = 2^{-1/2}\left[\phi_1\phi_2 - \phi_2\phi_1\right]$$

are really antisymmetric products, one can show that the above He wavefunction can be rewritten as follows:

$$\Psi = \frac{C_1}{3}\left\{\,|\phi_z\alpha\,\phi'_z\beta| - |\phi_x\alpha\,\phi'_x\beta| - |\phi_y\alpha\,\phi'_y\beta|\,\right\},$$

where $\phi_z = 1s + (3C_2/C_1)^{1/2}\,2p_z$ and $\phi'_z = 1s - (3C_2/C_1)^{1/2}\,2p_z$, with analogous definitions for $\phi_x$, $\phi'_x$, $\phi_y$, and $\phi'_y$. The physical interpretation of the three terms ($|\phi_z\alpha\,\phi'_z\beta|$, $|\phi_x\alpha\,\phi'_x\beta|$, and $|\phi_y\alpha\,\phi'_y\beta|$) is that $|\phi_z\alpha\,\phi'_z\beta|$ describes a contribution to $\Psi$ in which one electron of $\alpha$ spin resides in a region of space described by $\phi_z$ while the other electron of $\beta$ spin is in a region of space described by $\phi'_z$, and analogously for $|\phi_x\alpha\,\phi'_x\beta|$ and $|\phi_y\alpha\,\phi'_y\beta|$. Such a wavefunction thus allows the two electrons to occupy different regions of space since each orbital $\phi$ in a pair is different from its partner $\phi'$. The extent to which the orbitals differ depends on the $C_2/C_1$ ratio which, in turn, is governed by how strong the mutual repulsions between the two electrons are. Such a pair of so-called polarized orbitals is shown in the figure below.
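The algebra behind polarized orbital pairs can be checked numerically in a simplified setting. The sketch below is an assumption-laden illustration, not the three-component He function itself: it keeps only one correlating orbital `b` (so the mixing factor is $(C_2/C_1)^{1/2}$ rather than $(3C_2/C_1)^{1/2}$), works with the spatial part of the singlet function only, and represents the orbitals by arbitrary vectors on a hypothetical grid.

```python
import numpy as np

rng = np.random.default_rng(0)
a = rng.normal(size=50)   # stands in for the 1s orbital sampled on 50 grid points
b = rng.normal(size=50)   # stands in for a single 2p orbital on the same grid
C1, C2 = 0.98, 0.05       # hypothetical CI amplitudes, both positive

# Two-configuration spatial function: C1 a(1) a(2) - C2 b(1) b(2)
two_config = C1 * np.outer(a, a) - C2 * np.outer(b, b)

# Polarized orbital pair: phi = a + x b and phi' = a - x b, with x = sqrt(C2 / C1)
x = np.sqrt(C2 / C1)
phi = a + x * b
phi_prime = a - x * b

# Symmetric (singlet) spatial combination of the pair, scaled by C1 / 2
pair_form = 0.5 * C1 * (np.outer(phi, phi_prime) + np.outer(phi_prime, phi))

identical = bool(np.allclose(two_config, pair_form))
print("two-configuration form equals polarized-pair form:", identical)
```

The cross terms between `a` and `b` cancel in the symmetric combination, which is why mixing in a doubly excited configuration is equivalent to letting the two electrons occupy different, polarized orbitals.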

These approaches provide alternatives to the conventional tools of quantum chemistry. The CI, MCSCF, MPPT/MBPT, and CC methods move beyond the single-configuration picture by adding to the wave function more configurations whose amplitudes they each determine in their own way. This can lead to a very large number of CSFs in the correlated wave function, and, as a result, a need for extraordinary computer resources.

The density functional approaches are different. Here one solves a set of orbital-level equations

$$\left[-\frac{\hbar^2}{2m_e}\nabla^2 - \sum_A \frac{Z_A e^2}{|\mathbf{r}-\mathbf{R}_A|} + \int \frac{\rho(\mathbf{r}')\,e^2}{|\mathbf{r}-\mathbf{r}'|}\,d\mathbf{r}' + U(\mathbf{r})\right]\phi_i = \varepsilon_i\,\phi_i$$

in which the orbitals $\{\phi_i\}$ 'feel' potentials due to the nuclear centers (having charges $Z_A$), Coulombic interaction with the total electron density $\rho(\mathbf{r}')$, and a so-called exchange-correlation potential denoted $U(\mathbf{r})$. The particular electronic state for which the calculation is being performed is specified by forming a corresponding density $\rho(\mathbf{r}')$. Before going further in describing how DFT calculations are carried out, let us examine the origins underlying this theory.

The so-called Hohenberg-Kohn theorem

states that the ground-state electron density r(r)

describing an N-electron system uniquely determines the potential

V(r) in the Hamiltonian

H = Sj {-h2/2mej2 + V(rj) + e2/2 Skj 1/rj,k },

and, because H determines the ground-state energy and wave

function of the system, the ground-state density r(r)

determines the ground-state properties of the system. The proof of

this theorem proceeds as follows:

a. $\rho(\mathbf{r})$ determines N because $\int \rho(\mathbf{r})\,d^3r = N$.

b. Assume that there are two distinct potentials (aside from an additive constant that simply shifts the zero of total energy) $V(\mathbf{r})$ and $V'(\mathbf{r})$ which, when used in H and H', respectively, to solve for a ground state produce $E_0$, $\Psi$ and $E_0'$, $\Psi'$ that have the same one-electron density: $N\int |\Psi|^2\,d\mathbf{r}_2\,d\mathbf{r}_3 \ldots d\mathbf{r}_N = \rho(\mathbf{r}) = N\int |\Psi'|^2\,d\mathbf{r}_2\,d\mathbf{r}_3 \ldots d\mathbf{r}_N$.

c. If we think of $\Psi'$ as a trial variational wave function for the Hamiltonian H, we know that

$$E_0 < \langle\Psi'|H|\Psi'\rangle = \langle\Psi'|H'|\Psi'\rangle + \int \rho(\mathbf{r})\,[V(\mathbf{r}) - V'(\mathbf{r})]\,d^3r = E_0' + \int \rho(\mathbf{r})\,[V(\mathbf{r}) - V'(\mathbf{r})]\,d^3r.$$

d. Similarly, taking $\Psi$ as a trial function for the H' Hamiltonian, one finds that

$$E_0' < E_0 + \int \rho(\mathbf{r})\,[V'(\mathbf{r}) - V(\mathbf{r})]\,d^3r.$$

e. Adding the equations in c and d gives

$$E_0 + E_0' < E_0 + E_0',$$

a clear contradiction.

Hence, there cannot be two distinct potentials V and V' that give the same ground-state $\rho(\mathbf{r})$. So, the ground-state density $\rho(\mathbf{r})$ uniquely determines N and V, and thus H, and therefore $\Psi$ and $E_0$. Furthermore, because $\Psi$ determines all properties of the ground state, then $\rho(\mathbf{r})$, in principle, determines all such properties. This means that even the kinetic energy and the electron-electron interaction energy of the ground state are determined by $\rho(\mathbf{r})$. It is easy to see that $\int \rho(\mathbf{r})\,V(\mathbf{r})\,d^3r = V[\rho]$ gives the average value of the electron-nuclear (plus any additional one-electron additive potential) interaction in terms of the ground-state density $\rho(\mathbf{r})$, but how are the kinetic energy $T[\rho]$ and the electron-electron interaction energy $V_{ee}[\rho]$ expressed in terms of $\rho$?

The main difficulty with DFT is that the Hohenberg-Kohn theorem shows that the ground-state values of T, $V_{ee}$, V, etc. are all unique functionals of the ground-state $\rho$ (i.e., that they can, in principle, be determined once $\rho$ is given), but it does not tell us what these functional relations are.

To see how it might make sense that a property such as the kinetic energy, whose operator $-\frac{\hbar^2}{2m_e}\nabla^2$ involves derivatives, can be related to the electron density, consider a simple system of N non-interacting electrons moving in a three-dimensional cubic "box" potential. The energy states of such electrons are known to be

$$E = \frac{h^2}{8m_eL^2}\left(n_x^2 + n_y^2 + n_z^2\right),$$

where h is Planck's constant, L is the length of the box along the three axes, and $n_x$, $n_y$, and $n_z$ are the quantum numbers describing the state. We can view $n_x^2 + n_y^2 + n_z^2 = R^2$ as defining the squared radius of a sphere in three dimensions, and we realize that the density of quantum states in this space is one state per unit volume in the $n_x$, $n_y$, $n_z$ space. Because $n_x$, $n_y$, and $n_z$ must be positive integers, the volume covering all states with energy less than or equal to a specified energy $E = (h^2/8m_eL^2)\,R^2$ is 1/8 the volume of the sphere of radius R:

$$\Phi(E) = \frac{1}{8}\,\frac{4\pi}{3}\,R^3 = \frac{\pi}{6}\left(\frac{8m_eL^2E}{h^2}\right)^{3/2}.$$

Since there is one state per unit of such volume, $\Phi(E)$ is also the number of states with energy less than or equal to E, and is called the integrated density of states. The number of states $g(E)\,dE$ with energy between E and E+dE, the density of states, is the derivative of $\Phi$:

$$g(E) = \frac{d\Phi}{dE} = \frac{\pi}{4}\left(\frac{8m_eL^2}{h^2}\right)^{3/2} E^{1/2}.$$

If we calculate the total energy for N electrons, with the states having energies up to the so-called Fermi energy $E_F$ (i.e., the energy of the highest occupied molecular orbital, HOMO) doubly occupied, we obtain the ground-state energy:

$$E_0 = 2\int_0^{E_F} g(E)\,E\,dE = \frac{8\pi}{5}\left(\frac{2m_e}{h^2}\right)^{3/2} L^3 E_F^{5/2}.$$

The total number of electrons N can be expressed as

$$N = 2\int_0^{E_F} g(E)\,dE = \frac{8\pi}{3}\left(\frac{2m_e}{h^2}\right)^{3/2} L^3 E_F^{3/2},$$

which can be solved for $E_F$ in terms of N to then express $E_0$ in terms of N instead of $E_F$:

$$E_0 = \frac{3h^2}{10m_e}\left(\frac{3}{8\pi}\right)^{2/3} L^3 \left(\frac{N}{L^3}\right)^{5/3}.$$

This gives the total energy, which is also the kinetic energy in this case because the potential energy is zero within the "box", in terms of the electron density $\rho(x,y,z) = N/L^3$. It therefore may be plausible to express kinetic energies in terms of electron densities $\rho(\mathbf{r})$, but it is by no means clear how to do so for "real" atoms and molecules with electron-nuclear and electron-electron interactions operative.
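The state-counting step in this argument can be checked numerically. The sketch below is only an illustration (the function names `count_states` and `octant_volume` are ours, not from any library): it counts the box states with $n_x^2 + n_y^2 + n_z^2 \le R^2$ directly and compares the count with the octant volume $(\pi/6)R^3$. The two should agree better and better as R grows, because the discrepancy comes from the surface of the octant.

```python
import numpy as np

def count_states(R):
    """Count states (nx, ny, nz positive integers) with nx^2 + ny^2 + nz^2 <= R^2."""
    n = np.arange(1, int(R) + 1)
    n2 = n**2
    # Broadcast to a 3D table of nx^2 + ny^2 + nz^2 for all combinations.
    total = n2[:, None, None] + n2[None, :, None] + n2[None, None, :]
    return int(np.count_nonzero(total <= R**2))

def octant_volume(R):
    """Continuum estimate: one eighth of the volume of a sphere of radius R."""
    return (np.pi / 6.0) * R**3

for R in (10, 40, 100):
    exact = count_states(R)
    estimate = octant_volume(R)
    rel_diff = (estimate - exact) / estimate
    print(f"R = {R:4d}  counted = {exact:8d}  (pi/6) R^3 = {estimate:10.1f}  rel. diff = {rel_diff:.3f}")
```

The relative difference shrinks roughly as 1/R, which is why the continuum density of states is a good approximation when many states are occupied.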

In one of the earliest DFT models, the Thomas-Fermi theory, the kinetic energy of an atom or molecule is approximated using the above kind of treatment on a "local" level. That is, for each volume element in $\mathbf{r}$ space, one assumes the expression given above to be valid, and then one integrates over all $\mathbf{r}$ to compute the total kinetic energy:

$$T_{TF}[\rho] = \int \frac{3h^2}{10m_e}\left(\frac{3}{8\pi}\right)^{2/3} [\rho(\mathbf{r})]^{5/3}\,d^3r = C_F \int [\rho(\mathbf{r})]^{5/3}\,d^3r,$$

where the last equality simply defines the $C_F$ constant (which is 2.8712 in atomic units). Ignoring the correlation and exchange contributions to the total energy, this T is combined with the electron-nuclear V and Coulombic electron-electron potential energies to give the Thomas-Fermi total energy:

$$E_{0,TF}[\rho] = C_F \int [\rho(\mathbf{r})]^{5/3}\,d^3r + \int V(\mathbf{r})\,\rho(\mathbf{r})\,d^3r + \frac{e^2}{2}\iint \frac{\rho(\mathbf{r})\,\rho(\mathbf{r}')}{|\mathbf{r}-\mathbf{r}'|}\,d^3r\,d^3r'.$$

This expression is an example of how $E_0$ is given as a local density functional approximation (LDA). The term local means that the energy is given as a functional (i.e., a function of $\rho$) which depends only on $\rho(\mathbf{r})$ at points in space but not on $\rho(\mathbf{r})$ at more than one point in space.

Unfortunately, the Thomas-Fermi energy functional does not produce results that are of sufficiently high accuracy to be of great use in chemistry. What is missing in this theory are a. the exchange energy and b. the correlation energy; moreover, the kinetic energy is treated only in the approximate manner described.
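To get a feeling for the size of the error, one can evaluate the Thomas-Fermi kinetic energy for a density that is known exactly. The sketch below is an illustration under stated assumptions, not part of the original treatment: it uses the hydrogen-atom ground-state density $\rho(r) = e^{-2r}/\pi$ (atomic units), a simple trapezoid rule on a radial grid, and helper names (`hydrogen_1s_density`, `radial_integral`) that are our own. The exact kinetic energy of this state is 0.5 hartree.

```python
import numpy as np

C_F = 2.8712  # Thomas-Fermi constant in atomic units, as quoted in the text

def hydrogen_1s_density(r):
    """Exact hydrogen-atom ground-state density, rho = exp(-2r)/pi (atomic units)."""
    return np.exp(-2.0 * r) / np.pi

def radial_integral(f_values, r):
    """Integrate a spherically symmetric function over all space (trapezoid rule)."""
    integrand = 4.0 * np.pi * r**2 * f_values
    return float(np.sum(0.5 * (integrand[1:] + integrand[:-1]) * np.diff(r)))

r = np.linspace(0.0, 30.0, 60001)   # radial grid; the density is negligible beyond r = 30
rho = hydrogen_1s_density(r)

n_electrons = radial_integral(rho, r)                 # should be very close to 1
t_tf = C_F * radial_integral(rho ** (5.0 / 3.0), r)   # Thomas-Fermi kinetic energy

print(f"integrated density     = {n_electrons:.6f}")
print(f"T_TF for the H atom    = {t_tf:.4f} hartree (exact kinetic energy: 0.5)")
```

For this one-electron density the Thomas-Fermi expression recovers only a bit more than half of the exact kinetic energy, which illustrates why the kinetic energy functional is regarded as a weak point of the theory.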

In the book by Parr and Yang, it is shown how Dirac was able to address the exchange energy for the 'uniform electron gas' (N Coulomb interacting electrons moving in a uniform positive background charge whose magnitude balances the charge of the N electrons). If the exact expression for the exchange energy of the uniform electron gas is applied on a local level, one obtains the commonly used Dirac local density approximation to the exchange energy:

$$E_{ex,Dirac}[\rho] = -C_x \int [\rho(\mathbf{r})]^{4/3}\,d^3r,$$

with $C_x = (3/4)(3/\pi)^{1/3} = 0.7386$ in atomic units. Adding this exchange energy to the Thomas-Fermi total energy $E_{0,TF}[\rho]$ gives the so-called Thomas-Fermi-Dirac (TFD) energy functional.
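As a small numerical illustration of the Dirac expression, the sketch below evaluates $-C_x \int \rho^{4/3}\,d^3r$ for the hydrogen-atom ground-state density $\rho(r) = e^{-2r}/\pi$ in atomic units. The choice of test density, the radial trapezoid rule, and the helper name `radial_integral` are assumptions made here for illustration; the formula is applied exactly as written above, without any spin resolution.

```python
import numpy as np

C_X = 0.75 * (3.0 / np.pi) ** (1.0 / 3.0)  # Dirac exchange constant, about 0.7386

def radial_integral(f_values, r):
    """Integrate a spherically symmetric function over all space (trapezoid rule)."""
    integrand = 4.0 * np.pi * r**2 * f_values
    return float(np.sum(0.5 * (integrand[1:] + integrand[:-1]) * np.diff(r)))

r = np.linspace(0.0, 30.0, 60001)
rho = np.exp(-2.0 * r) / np.pi              # hydrogen 1s density, atomic units

e_x_dirac = -C_X * radial_integral(rho ** (4.0 / 3.0), r)

print(f"C_x                 = {C_X:.4f}")
print(f"Dirac exchange (H)  = {e_x_dirac:.4f} hartree")
```

For a one-electron system the exact exchange energy must cancel the electron's Coulomb self-repulsion, which for this density is -5/16 = -0.3125 hartree, so the local expression recovers roughly two thirds of it.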

Because electron densities vary rather strongly spatially near the nuclei, corrections to the above approximations to $T[\rho]$ and $E_{ex,Dirac}$ are needed. One of the more commonly used so-called gradient-corrected approximations is that invented by Becke, and referred to as the Becke88 exchange functional:

$$E_{ex}(\text{Becke88}) = E_{ex,Dirac}[\rho] - \gamma \int x^2 \rho^{4/3} \left(1 + 6\gamma\,x \sinh^{-1}(x)\right)^{-1} d\mathbf{r},$$

where $x = \rho^{-4/3}\,|\nabla\rho|$, and $\gamma$ is a parameter chosen so that the above exchange energy can best reproduce the known exchange energies of specific electronic states of the inert gas atoms (Becke finds $\gamma$ to equal 0.0042). A common gradient correction to the earlier $T[\rho]$ is called the Weizsacker correction and is given by

$$\delta T_{Weizsacker} = \frac{1}{72}\,\frac{\hbar^2}{m_e} \int \frac{|\nabla\rho(\mathbf{r})|^2}{\rho(\mathbf{r})}\,d\mathbf{r}.$$
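As a brief worked example (our own illustration, in atomic units where $\hbar = m_e = 1$), both gradient quantities can be evaluated in closed form for the hydrogen-atom ground-state density, because that density is a simple exponential:

```latex
\begin{align*}
\rho(r) &= \frac{e^{-2r}}{\pi}, \qquad
\nabla\rho = -2\,\rho\,\hat{\mathbf{r}}, \qquad
\frac{|\nabla\rho|^2}{\rho} = 4\,\rho, \\
\delta T_{Weizsacker} &= \frac{1}{72}\int \frac{|\nabla\rho|^2}{\rho}\,d\mathbf{r}
  = \frac{4}{72}\int \rho\,d\mathbf{r} = \frac{1}{18} \approx 0.056\ \text{hartree}, \\
x &= \rho^{-4/3}\,|\nabla\rho| = 2\,\rho^{-1/3} = 2\,\pi^{1/3}\,e^{2r/3}.
\end{align*}
```

The Weizsacker term is thus a modest positive correction for this density, and the Becke variable x grows without bound in the low-density tail, which is the region where the $\sinh^{-1}(x)$ factor in the denominator of the Becke88 integrand matters most.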

Although the above discussion suggests how one might compute the ground-state energy once the ground-state density $\rho(\mathbf{r})$ is given, one still needs to know how to obtain $\rho$. Kohn and Sham (KS) introduced a set of so-called KS orbitals obeying the following equation:

$$\left\{ -\tfrac{1}{2}\nabla^2 + V(\mathbf{r}) + e^2 \int \frac{\rho(\mathbf{r}')}{|\mathbf{r}-\mathbf{r}'|}\,d\mathbf{r}' + U_{xc}(\mathbf{r}) \right\} \phi_j = \varepsilon_j \phi_j,$$

where the so-called exchange-correlation potential $U_{xc}(\mathbf{r}) = \delta E_{xc}[\rho]/\delta\rho(\mathbf{r})$ could be obtained by functional differentiation if the exchange-correlation energy functional $E_{xc}[\rho]$ were known. KS also showed that the KS orbitals $\{\phi_j\}$ could be used to compute the density $\rho$ by simply adding up the orbital densities multiplied by orbital occupancies $n_j$:

$$\rho(\mathbf{r}) = \sum_j n_j\,|\phi_j(\mathbf{r})|^2$$

(here $n_j$ = 0, 1, or 2 is the occupation number of the orbital $\phi_j$ in the state being studied), and that the kinetic energy should be calculated as

$$T = \sum_j n_j \left\langle \phi_j(\mathbf{r}) \left| -\tfrac{1}{2}\nabla^2 \right| \phi_j(\mathbf{r}) \right\rangle.$$

The same investigations of the idealized 'uniform electron gas' that identified the Dirac exchange functional found that the correlation energy (per electron) could also be written exactly as a function of the electron density $\rho$ of the system, but only in two limiting cases: the high-density limit (large $\rho$) and the low-density limit. There still exists no exact expression for the correlation energy, even for the uniform electron gas, that is valid at arbitrary values of $\rho$. Therefore, much work has been devoted to creating efficient and accurate interpolation formulas connecting the low- and high-density uniform electron gas expressions (see Appendix E in the Parr and Yang book for further details). One such expression is

$$E_C[\rho] = \int \rho(\mathbf{r})\,\varepsilon_c(\rho)\,d\mathbf{r},$$

where

$$\varepsilon_c(\rho) = \frac{A}{2}\left\{ \ln\frac{x^2}{X} + \frac{2b}{Q}\tan^{-1}\frac{Q}{2x+b} - \frac{b\,x_0}{X_0}\left[ \ln\frac{(x-x_0)^2}{X} + \frac{2(b+2x_0)}{Q}\tan^{-1}\frac{Q}{2x+b} \right] \right\}$$

is the correlation energy per electron. Here $x = r_s^{1/2}$, $X = x^2 + bx + c$, $X_0 = x_0^2 + bx_0 + c$, and $Q = (4c - b^2)^{1/2}$, with A = 0.0621814, $x_0$ = -0.409286, b = 13.0720, and c = 42.7198. The parameter $r_s$ is how the density $\rho$ enters, since $(4/3)\pi r_s^3$ is equal to $1/\rho$; that is, $r_s$ is the radius of a sphere whose volume is the effective volume occupied by one electron. A reasonable approximation to the full $E_{xc}[\rho]$ would contain the Dirac (and perhaps gradient corrected) exchange functional plus the above $E_C[\rho]$, but there are many alternative approximations to the exchange-correlation energy functional. Currently, many workers are doing their best to "cook up" functionals for the correlation and exchange energies, but no one has yet invented functionals that are so reliable that most workers agree to use them.
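The interpolation formula above is straightforward to evaluate. The sketch below is a minimal transcription under stated assumptions: the function name `correlation_energy_per_electron` is ours, the first logarithm is taken as $\ln(x^2/X)$, the parameters are the four numbers quoted in the text, and the result is assumed to be in atomic units (hartree) like the other constants in this section.

```python
import math

# Parameters quoted in the text for the interpolation formula
A = 0.0621814
X0 = -0.409286
B = 13.0720
C = 42.7198

def correlation_energy_per_electron(rho):
    """Correlation energy per electron of the uniform electron gas at density rho."""
    r_s = (3.0 / (4.0 * math.pi * rho)) ** (1.0 / 3.0)   # (4/3) pi r_s^3 = 1/rho
    x = math.sqrt(r_s)
    big_x = x * x + B * x + C          # X  = x^2  + b x  + c
    big_x0 = X0 * X0 + B * X0 + C      # X0 = x0^2 + b x0 + c
    q = math.sqrt(4.0 * C - B * B)
    atan_term = math.atan(q / (2.0 * x + B))
    bracket = math.log((x - X0) ** 2 / big_x) + (2.0 * (B + 2.0 * X0) / q) * atan_term
    return 0.5 * A * (
        math.log(x * x / big_x)
        + (2.0 * B / q) * atan_term
        - (B * X0 / big_x0) * bracket
    )

for r_s in (0.5, 1.0, 2.0, 5.0, 10.0):
    rho = 3.0 / (4.0 * math.pi * r_s**3)
    print(f"r_s = {r_s:5.1f}   eps_c = {correlation_energy_per_electron(rho):.5f}")
```

The values are negative and shrink in magnitude as $r_s$ grows (that is, as the density falls). In a real calculation this function would be evaluated at every grid point and integrated against $\rho(\mathbf{r})$ to give $E_C[\rho]$.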

To summarize, in implementing any DFT, one usually proceeds as follows:

1. An atomic orbital basis is chosen in terms of which the KS orbitals are to be expanded.

2. Some initial guess is made for the LCAO-KS expansion coefficients $C_{j,a}$: $\phi_j = \sum_a C_{j,a}\,\chi_a$.

3. The density is computed as $\rho(\mathbf{r}) = \sum_j n_j\,|\phi_j(\mathbf{r})|^2$. Often, $\rho(\mathbf{r})$ is expanded in an atomic orbital basis, which need not be the same as the basis used for the $\phi_j$, and the expansion coefficients of $\rho$ are computed in terms of those of the $\phi_j$. It is also common to use an atomic orbital basis to expand $\rho^{1/3}(\mathbf{r})$ which, together with $\rho$, is needed to evaluate the exchange-correlation functional's contribution to $E_0$.

4. The current iteration's density is used in the KS equations to determine the Hamiltonian

$$\left\{ -\tfrac{1}{2}\nabla^2 + V(\mathbf{r}) + e^2 \int \frac{\rho(\mathbf{r}')}{|\mathbf{r}-\mathbf{r}'|}\,d\mathbf{r}' + U_{xc}(\mathbf{r}) \right\},$$

whose "new" eigenfunctions $\{\phi_j\}$ and eigenvalues $\{\varepsilon_j\}$ are found by solving the KS equations.

5. These new $\phi_j$ are used to compute a new density, which, in turn, is used to solve a new set of KS equations. This process is continued until convergence is reached (i.e., until the $\phi_j$ used to determine the current iteration's $\rho$ are the same $\phi_j$ that arise as solutions on the next iteration).

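6. Once the converged $\rho(\mathbf{r})$ is determined, the energy can be computed using the earlier expression

$$E[\rho] = \sum_j n_j \left\langle \phi_j(\mathbf{r}) \left| -\tfrac{1}{2}\nabla^2 \right| \phi_j(\mathbf{r}) \right\rangle + \int V(\mathbf{r})\,\rho(\mathbf{r})\,d\mathbf{r} + \frac{e^2}{2}\iint \frac{\rho(\mathbf{r})\,\rho(\mathbf{r}')}{|\mathbf{r}-\mathbf{r}'|}\,d\mathbf{r}\,d\mathbf{r}' + E_{xc}[\rho].$$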
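The six steps above can be sketched on a deliberately tiny model. Everything specific in the sketch is an assumption made for illustration and is not how production DFT codes work: the "basis" is a uniform one-dimensional grid rather than atomic orbitals, the external potential is harmonic, the electron-electron repulsion is a softened Coulomb kernel, the Dirac exchange expression (borrowed unchanged from three dimensions) stands in for $E_{xc}$, two electrons doubly occupy the lowest orbital, and simple linear density mixing is used to stabilize the iterations. The function name `toy_ks_scf` is ours.

```python
import numpy as np

C_X = 0.75 * (3.0 / np.pi) ** (1.0 / 3.0)  # Dirac exchange constant from the text

def toy_ks_scf(n_grid=201, box=10.0, mix=0.4, tol=1e-7, max_iter=500):
    # Step 1: choose a "basis" (here simply grid points on a line).
    x = np.linspace(-box / 2.0, box / 2.0, n_grid)
    dx = x[1] - x[0]
    off = np.ones(n_grid - 1)
    kinetic = -0.5 * (np.diag(off, -1) - 2.0 * np.eye(n_grid) + np.diag(off, 1)) / dx**2
    v_ext = 0.5 * x**2                                  # model external potential
    soft_coulomb = 1.0 / np.sqrt((x[:, None] - x[None, :]) ** 2 + 1.0)

    # Steps 2-3: initial guess, expressed directly as a density holding 2 electrons.
    rho = np.full(n_grid, 2.0 / box)
    converged = False
    for iteration in range(1, max_iter + 1):
        # Step 4: build the KS Hamiltonian from the current density and diagonalize it.
        v_hartree = soft_coulomb @ rho * dx
        v_x = -(4.0 / 3.0) * C_X * rho ** (1.0 / 3.0)   # functional derivative of E_x
        hamiltonian = kinetic + np.diag(v_ext + v_hartree + v_x)
        eps, orbitals = np.linalg.eigh(hamiltonian)
        phi0 = orbitals[:, 0] / np.sqrt(dx)             # normalized so sum(phi0^2) dx = 1
        # Step 5: new density from the doubly occupied lowest orbital; test convergence.
        rho_new = 2.0 * phi0**2
        if np.max(np.abs(rho_new - rho)) < tol:
            rho, converged = rho_new, True
            break
        rho = (1.0 - mix) * rho + mix * rho_new         # linear mixing
    # Step 6: total energy from the final density and orbital.
    e_kin = 2.0 * dx * phi0 @ kinetic @ phi0
    e_ext = dx * np.sum(v_ext * rho)
    e_hartree = 0.5 * dx * dx * rho @ soft_coulomb @ rho
    e_x = -C_X * dx * np.sum(rho ** (4.0 / 3.0))
    return {
        "converged": converged,
        "iterations": iteration,
        "n_electrons": float(dx * np.sum(rho)),
        "orbital_energy": float(eps[0]),
        "energy": float(e_kin + e_ext + e_hartree + e_x),
    }

result = toy_ks_scf()
print(result)
```

The structure is what matters here: the Hamiltonian depends on the density, the density depends on the Hamiltonian's eigenfunctions, and the loop repeats until the two are mutually consistent. Mixing only a fraction of the new density into the old one at each pass is a common, simple way to keep such iterations from oscillating.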

In closing this section, it should once again be emphasized that this area is currently undergoing explosive growth and much scrutiny. As a result, it is nearly certain that many of the specific functionals discussed above will be replaced in the near future by improved and more rigorously justified versions. It is also likely that extensions of DFT to excited states (many workers are actively pursuing this) will be placed on more solid ground and made applicable to molecular systems. Because the computational effort involved in these approaches scales much less strongly with basis set size than for conventional (SCF, MCSCF, CI, etc.) methods, density functional methods offer great promise and are likely to contribute much to quantum chemistry in the next decade.

There is a nice DFT web site established by the Arias research group at Cornell devoted to a DFT project involving highly efficient computer implementation within object-oriented programming.

The development of electronic structure theory has been ongoing since the 1940s. At first, only a few scientists had access to computers, and they began to develop numerical methods for solving the requisite equations (e.g., the Hartree-Fock equations for orbitals and orbital energies, the configuration interaction equations for electronic state energies and wavefunctions). By the late 1960s, several research groups had developed reasonably efficient computer codes (written primarily in Fortran with selected subroutines that needed to run especially efficiently in machine language), and the explosive expansion of this discipline was underway. By the 1980s and through the 1990s, these electronic structure programs began to be used by practicing "bench chemists" both because they became easier to use and because their efficiency and the computers' speed grew (and cost dropped) to the point at which modest to large molecules could be studied at reasonable cost and effort.

Even with much faster computers, there remain severe bottlenecks to extending ab initio quantum chemistry tools to larger and larger molecules (and to extended systems such as polymers, solids, and surfaces). Two of the most difficult issues involve the two-electron integrals $(\chi_i \chi_j|1/r_{1,2}|\chi_k \chi_l)$. Nearly all correlated electronic structure methods express the electronic energy E (as well as its gradient and second derivative or Hessian) in terms of integrals taken over the molecular orbitals, not the basis atomic orbitals. This usually then requires that the integrals be first evaluated in terms of the basis orbitals and subsequently transformed from the basis orbital to the molecular orbital representation using the LCAO-MO expansion $\phi_j = \sum_a C_{j,a}\,\chi_a$. For example, one such step in the transformation involves computing

$$\sum_a C_{i,a}\,(\chi_a \chi_j|1/r_{1,2}|\chi_k \chi_l) = (\phi_i \chi_j|1/r_{1,2}|\chi_k \chi_l).$$

Four such one-index transformations must be performed to eventually obtain the $(\phi_i \phi_j|1/r_{1,2}|\phi_k \phi_l)$ integrals. Given a set of M basis orbitals, there are ca. $M^4/8$ integrals $(\chi_a \chi_j|1/r_{1,2}|\chi_k \chi_l)$. Each one-index transformation step requires ca. $M^5$ calculations (i.e., to form the sum of products such as $\sum_a C_{i,a}\,(\chi_a \chi_j|1/r_{1,2}|\chi_k \chi_l)$). Hence the task of forming these integrals over the molecular orbitals scales as the fifth power of M.
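The reason the transformation is done one index at a time can be seen in a few lines. In the sketch below (an illustration only: the arrays are random stand-ins rather than real integrals, and the function names are ours), `transform_stepwise` carries out four successive one-index transformations, each costing on the order of $M^5$ operations, while `transform_all_at_once` sums over all four atomic-orbital indices for every molecular-orbital integral, which costs on the order of $M^8$. Both give the same numbers.

```python
import numpy as np

def transform_stepwise(ao_ints, C):
    """Four successive one-index transformations (each roughly M^5 work).

    C[j, a] is the coefficient of basis function a in molecular orbital j.
    """
    tmp = np.einsum("ia,abcd->ibcd", C, ao_ints)
    tmp = np.einsum("jb,ibcd->ijcd", C, tmp)
    tmp = np.einsum("kc,ijcd->ijkd", C, tmp)
    return np.einsum("ld,ijkd->ijkl", C, tmp)

def transform_all_at_once(ao_ints, C):
    """Naive transformation summing over all four AO indices at once (roughly M^8 work)."""
    return np.einsum("ia,jb,kc,ld,abcd->ijkl", C, C, C, C, ao_ints)

rng = np.random.default_rng(0)
M = 6                                        # small, so the naive version stays cheap
ao_ints = rng.normal(size=(M, M, M, M))      # random stand-in for AO two-electron integrals
C = rng.normal(size=(M, M))                  # random stand-in for LCAO-MO coefficients

mo_stepwise = transform_stepwise(ao_ints, C)
mo_naive = transform_all_at_once(ao_ints, C)
print("results agree:", np.allclose(mo_stepwise, mo_naive))
```

Even with the stepwise approach, every intermediate array holds $M^4$ numbers, which is why storage, and not only arithmetic, becomes a bottleneck for large basis sets.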

The research group of Professor Martin Head-Gordon has been attacking two aspects of the above integral bottleneck.

First, his group has been deriving and implementing in a very efficient manner expressions for the electronic energy (and its gradient with respect to nuclear positions) that are not written in terms of integrals over the molecular orbitals but in terms of integrals over the basis atomic orbitals. This allows them to produce what are called "direct" procedures for evaluating energies and gradients. The advantages of such direct methods are (1) that one does not have to go through the $M^5$ integral transformation process, and (2) that one does not have to first compute all of the atomic-orbital integrals $(\chi_i \chi_j|1/r_{1,2}|\chi_k \chi_l)$. Instead, one can compute groups of these integrals (e.g., as many as one can retain within the fast main memory of the computer), calculate the contributions made by these integrals to the energy or gradient, and then delete this group of integrals and proceed to compute (and use) another group of such integrals. This allows one to handle larger basis sets than when one has to first obtain all of the integrals and store them (e.g., on disk).

The second major advance that the Head-Gordon group has fostered is developing clever and efficient new tools for computing the atomic-orbital-level two-electron integrals $(\chi_i \chi_j|1/r_{1,2}|\chi_k \chi_l)$, especially when the product functions $\chi_i(1)\chi_j(1)$ and $\chi_k(2)\chi_l(2)$ involve functions that are distant from one another. When there is good reason to view these products as residing in different regions of space (e.g., when the constituent atomic orbitals are centered on atoms in different parts of a large molecule), so-called multipole expansion methods (and other tools) can be used to approximate the two-electron integrals.

In this way, the Head-Gordon group has been able to (a) calculate integrals in which all of the basis orbitals reside on the same or very nearby atoms in conventional (highly efficient) ways, (b) approximate (very rapidly and in a numerically reliable multipolar manner) the integrals where the charge densities are somewhat distant yet still significant, and (c) ignore (to controlled tolerances) integrals for product densities that are even more distant. This has allowed them to obtain integral evaluation and energy-computation schemes that display nearly linear scaling with the number of atoms (and thus basis orbitals) in the system. It is only through such efforts that there is any hope of extending ab initio electronic structure methods to large molecules and extended systems.

The Head-Gordon group has also been expanding the horizons of the very powerful coupled-cluster method for treating electron correlation at a high level, especially by introducing so-called local methods for handling interactions among electrons. In particular, by making clever definitions of localized occupied and virtual orbitals, they have been able to develop new methods whose computational effort promises to scale more practically with the number of electrons (i.e., the molecule size) than do conventional coupled-cluster methods. Combining their advances in coupled-cluster theory with their breakthroughs in handling electron-electron interactions has led to a large body of important new work from this outstanding group.

At present, more electronic structure calculations are performed by non-theorists than by practicing theoretical chemists. This is largely due to the proliferation of widely used computer programs. This does not mean that all that needs to be done in electronic structure theory is done. The rates at which improvements are being made in the numerical algorithms used to solve the problems as well as at which new models are being created remain as high as ever. For example, Professor Rich Friesner has developed and Professor Emily Carter has implemented for correlated methods a highly efficient way to replace the list of two-electron integrals $(\phi_i \phi_j|1/r_{1,2}|\phi_k \phi_l)$, which number $N^4$, where N is the number of atomic orbital basis functions, by a much smaller list $(\phi_i \phi_j|l)$ from which the original integrals can be rewritten as:

$$(\phi_i \phi_j|1/r_{1,2}|\phi_k \phi_l) = \sum_g \left(\phi_i(g)\,\phi_j(g)\right) \int d\mathbf{r}\,\frac{\phi_k(\mathbf{r})\,\phi_l(\mathbf{r})}{|\mathbf{r}-g|}.$$

This tool, which they call pseudospectral methods, promises to reduce the CPU, memory, and disk storage requirements for many electronic structure calculations, thus permitting their applications to much larger molecular systems.

In addition to ongoing developments in the underlying theory and computer implementation, the range of phenomena and the kinds of physical properties that one needs electronic structure theory to address is growing rapidly. Professor Gustavo Scuseria has been especially active in developing new methods for treating very large molecules, in particular, methods whose computer requirements scale linearly (or nearly so) with molecular size.

In addition, a great deal of progress has been made in constructing sequences of atomic orbital basis sets whose use allows one to extrapolate to essentially complete-basis quality results. Thom Dunning has, more than anyone else, been responsible for progress in this area.

There are a variety of tools that aim to compute differences between state energies (e.g., electron affinities, ionization potentials, and excitation energies) directly. Several workers who have been instrumental in developing these methods include those shown below.

Professors Lorenz Cederbaum (l), University of Heidelberg, Professor Jan Linderberg (r), Aarhus University, and Professor Yngve Ohrn (below), University of Florida.

Also, Professor Howard Taylor, University of Southern California (below) contributed much to these developments as well as to methods for treating metastable electronic states.

In more recent years, the author, Professor J. V. Ortiz (below, l), Kansas State University, Professor P. Jørgensen (below, r), and Professor J. Oddershede (bottom) have continued to develop these and related methods.

In addition to the many practicing quantum chemists introduced above, I show below photos of several others whose research and educational efforts will be of interest to students reading this web site.

Professor Marcel Nooijen, University of Waterloo

At Yale University, Prof. Victor Batista is working on using quantum and semi-classical methods on many-molecule condensed-phase systems both in equilibrium and undergoing dynamical evolution. These systems include biological species such as rhodopsin and semi-conductor materials.

Professor Victor S. Batista, Yale University

Professor Roi Baer, Hebrew University

Professor Baer is involved in a wide range of research at the interface between electronic structure and dynamics. He has a very nice web page devoted not only to his own group's efforts but detailing work of many other scholars.

Professor Krzysztof Szalewicz, Delaware

Professor Szalewicz has used so-called symmetry adapted perturbation theory (SAPT) to develop very accurate methods for evaluating weak intermolecular interactions. His group has also shown how to use such ideas to improve upon density functional theory by adding to DFT the effects of dispersion interactions which DFT usually leaves out.

Professor Millard Alexander, Maryland

Professor Alexander's group has contributed much to the study of the spectra, structure, and reactivity of molecular radicals, especially as influenced in collisions with other species.

Professor Kirk Peterson, Washington State University

Professor Peterson has been very active in developing systematic basis sets for use when employing correlated electronic structure methods. His web site offers a link to a wide range of such basis sets that can be downloaded in very convenient forms.

Professor John Herbert, Ohio State University

Professor John Herbert of Ohio State University is working on combined electronic and nuclear-motion dynamical studies of water cluster anions. His group is also involved in advancing the theoretical framework of photochemistry and developing novel and practical methods within electronic structure theory.

Professor Seymour Wang, Boston University

Professor Wang works on multi-scale Monte-Carlo simulations and on the development of new force fields using force matching methods.

Professor Giulia Galli, Univ. of California, Davis

Professor Giulia Galli heads the ab initio nanoscience group at the Univ. of California, Davis.

Professor Sabre Kais, Purdue University

Professor Kais describes his research as follows: The research in our group is mainly devoted to electronic structure and dynamics of atoms, molecules, clusters and quantum dots. The research program includes developing and applying the renormalization group, finite-size scaling and the pivot method.

Professor Ramon Hernandez, Centro de Investigaciones Quimicas, Mexico

Professor Hernandez studies the effects of van der Waals complex formation or of bi-molecular collisions on the spectra and energetics and energy transfer within open-shell molecules.

Professor Alexei Stuchebrukhov, University of California, Davis

He describes his research as follows: We are interested in two fundamental processes in chemistry and biology: electron transfer and proton transfer. In our research we develop theory and perform large-scale computer simulations of these processes in complex biological molecular systems.

In addition to Prof. Balusubramanian, another expert on the effects of relativity on atomic and molecular properties is Prof. Pekka Pyykko of Helsinki University (below). This dynamic scholar always gives a wonderful talk on how relativity contributes to many properties of matter in nature.

Professor Maciej Gutowski, Heriot-Watt University, Scotland

Professor Gutowski has been involved in a wide range of electronic structure issues including dipole-bound electrons, electrons attaching to biological molecules, and the theory of intermolecular interactions.

Most people know that the study of transition metal containing systems is especially difficult because of the near-degeneracy of the ns and (n-1) d orbitals and the role of relativistic effects in the heavier elements. Prof. Gernot Frenking of the University of Marburg, shown below, has devoted a great deal of effort to understanding such systems. His group has also developed a powerful and useful means of decomposing the bonding interactions among atoms into various physical contributions.

Professor Gernot Frenking

Professor Piotr Piecuch, Michigan State University, has been involved in extending the coupled-cluster method to allow one to use multiconfigurational reference wave functions, which is very important when one wishes to describe diradicals and bond-breaking and bond-forming processes. He and his co-workers have formulated what they call a renormalized coupled-cluster method that can accurately describe bond breaking and excited electronic surfaces at a computational cost similar to that of a single-configuration reference calculation. They have also been looking into using explicitly correlated two-electron exponential cluster expansions of the N-electron wave function to see to what extent one can capture most (if not all) of the electron-electron correlations within such a compact framework.

Professor Piotr Piecuch (upper left) with his research group at Michigan State University

Professor Joe Francisco, Purdue University

Professor Francisco says of his research: Research in our laboratory focuses on basic studies in spectroscopy, kinetics and photochemistry of novel transient species in the gas phase.

Professor Nicholas Handy (above), Cambridge University, has made numerous contributions to electronic structure theory, to the theory of molecular spectroscopy, and to the rapidly expanding field of density functional theory.

Professor Jose Ramon Alvarez Collado (above) has made several contributions to Hartree-Fock and configuration interaction theory as well as to the treatment of vibrational Hamiltonians and vibrational motions of molecules. He recently has shown how to handle large clusters or solid materials that contain a very large number of unpaired electrons.

Professor Andrew Rappe, University of Pennsylvania

Professor Rappe says of his research: My research group creates and uses new theoretical and computational approaches to study complex systems in materials science, condensed-matter physics, and physical chemistry.

Professor Thomas Sommerfeld, Southeast Louisiana University

Professor Sommerfeld studies electron-driven chemistry, including electrons bound to water clusters and the metastable anion states that often arise when electrons bind weakly to molecules or molecular clusters.

Professor Bjørn Roos, Lund University, Sweden has been one of his nation's leading quantum chemists for many years, has developed one of the most powerful and widely used quantum chemistry codes, and has organized many schools on quantum chemistry.

Professor Jerzy Leszczynski at Jackson State University has established a very strong program in quantum chemistry and has hosted many very important conferences as well as "schools".

Professor Kimihiko Hirao's group in Tokyo has made many important contributions to the development and applications of modern quantum chemistry tools, especially those involving multi-configurational wave functions.

There exists an approach to solving the Schrödinger equation that has proven to be extremely accurate and is based on viewing the time-dependent Schrödinger equation as a diffusion equation with its time variable defined as imaginary. The idea then is to propagate an "initial" wavefunction (chosen to possess the proper permutational and symmetry properties of the desired solution) forward in time with the diffusion equation (having a source and sink term arising from the electron-nuclear and electron-electron Coulomb potentials). It can be shown that such a propagated wavefunction will converge to the lowest energy state that has the symmetry and nodal behavior of the trial wavefunction. The people who have done the most to propose, implement, and improve such so-called Diffusion Monte-Carlo type procedures include:

Professor Bill Lester, University of California, Berkeley

Professor Jules Moskowitz of New York University

and Professor David Ceperley of the University of Illinois
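The imaginary-time diffusion idea can be illustrated with a very small simulation. The sketch below is a toy under stated assumptions and not the procedure used by the groups named above: it treats a single particle in a one-dimensional harmonic potential $V = x^2/2$ (atomic units, exact ground-state energy 0.5), so there are no electrons, no nodes, and no importance sampling. Walkers diffuse (the kinetic-energy part), are duplicated or removed according to the local potential (the source and sink term), and a reference energy is adjusted to keep the population near its target. The function name and all parameter values are our own choices.

```python
import numpy as np

def dmc_harmonic_oscillator(n_target=2000, n_steps=3000, n_equil=1000, dt=0.01, seed=0):
    """Toy diffusion Monte Carlo estimate of the ground-state energy for V = x^2 / 2."""
    rng = np.random.default_rng(seed)
    walkers = rng.uniform(-2.0, 2.0, size=n_target)   # arbitrary initial distribution
    e_ref = np.mean(0.5 * walkers**2)                  # reference energy controlling growth
    samples = []
    for step in range(n_steps):
        # Diffusion: free-particle propagation over an imaginary-time step dt.
        walkers = walkers + rng.normal(scale=np.sqrt(dt), size=walkers.size)
        # Branching: walkers in low-potential regions multiply, others die out.
        potential = 0.5 * walkers**2
        weights = np.exp(-dt * (potential - e_ref))
        copies = np.floor(weights + rng.random(walkers.size)).astype(int)
        walkers = np.repeat(walkers, copies)
        # Population control: nudge the reference energy toward the target population.
        mean_v = np.mean(0.5 * walkers**2)
        e_ref = mean_v + (1.0 - walkers.size / n_target)
        if step >= n_equil:
            samples.append(mean_v)   # average potential over walkers estimates E0
    return float(np.mean(samples))

estimate = dmc_harmonic_oscillator()
print(f"DMC estimate of E0: {estimate:.3f}  (exact value for this model: 0.5)")
```

After the initial transient decays, the walkers are distributed according to the ground-state wavefunction itself, so averaging the potential over them estimates the ground-state energy. The result carries statistical noise and a small time-step error, both of which shrink with more walkers and a smaller dt.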

Professor Greg Gellene, Texas Tech, has pioneered the study of Rydberg species and of concerted reactions of small molecules and molecular complexes.

Professor Anna Krylov, University of Southern California, has been developing new electronic structure methods aimed at particularly difficult classes of compounds where multiconfigurational wave functions are essential. These include diradical and triradical species.

Professor Debbie Evans, University of New Mexico, has been working on electron transport and other quantum dynamics in branched macromolecules and other condensed phase systems.

Professor Angela Wilson, University of North Texas has done a lot to calibrate basis sets so we know to what extent we can trust them in various kinds of electronic structure calculations.

Prof. Angela Wilson

Professor Thomas Cundari, University of North Texas, is very active in using electronic structure methods to study inorganic and organometallic species.

Prof. Thomas Cundari (red shirt).

Professor Wes Borden recently joined the University of North Texas as Welch Professor. He has a long and distinguished record of applying quantum chemistry to important problems in organic chemistry.

Prof. Wes Borden

Professor Ludwik Adamowicz (below) of the University of Arizona has done a lot of work on molecular anions, especially dipole-bound anions involving bio-molecules. He has also done much work on multi-reference coupled cluster methods, methods for generating non-adiabatic multiparticle wave functions, and methods for calculating rovibrational states of polyatomic molecules.

Many younger theoretical chemists are focusing efforts on simulating and understanding processes occurring in condensed media. One of the bright young stars in this direction is Professor Oleg Prezhdo at the University of Washington in Seattle. The goal of Professor Prezhdo's research is to obtain a theoretical understanding at the molecular level of chemical reactivity and energy transfer in complex condensed-phase chemical and biological environments. This requires the development of new theoretical and computational tools and the application of these tools to challenging chemical problems in direct connection to experiments.

Professor Oleg Prezhdo, University of Washington (standing left at top)

Professor Kwang Kim is using a variety of theoretical methods to study functional materials with the support of the Creative Research Initiative, Ministry of Science and Technology of Korea. His laboratory has three subdivisions: (1) the quantum theoretical chemistry group, (2) a theoretical condensed matter physics group, and (3) a synthesis and property measurement group.

Indiana University has had a long tradition of excellence in theoretical chemistry. Currently, its chemistry faculty include Prof. Peter Ortoleva, Prof. Krishnan Raghavachari, and Prof. Srinivasan Iyengar who are shown below.

Professor Peter Ortoleva who works on pattern formations within biological systems as well as in geology.

Professor Krishnan Raghavachari who has made numerous advances in quantum chemical methodologies and in the study of small to moderate size clusters of main group atoms.

Prof. Srinivasan Iyengar who developed the atom-centered density matrix propagation method for combining electronic structure and collision/reaction dynamics and is applying this to a wide variety of problems.

At the University of Notre Dame, there are also several faculty specializing in theory. They include

Prof. Eli Barkai who studies single-molecule spectroscopy and fractional kinetics.

Prof. Dan Gezelter who studies condensed-phase molecular dynamics, and

Prof. Dan Chipman who is interested in solvation effects, electronic structure methods developments and free radicals.

Several new faculty hires have recently been made in extremely good chemistry departments, including those shown below. We should watch for many good new developments from these people.

Prof. Garnet Chan, Cornell University, says the following about his group's work:

Our work is in the area of the electronic structure and dynamics of complex processes. We engage in developing new and more powerful theoretical techniques which enable us to describe strong electronic correlation problems.

Of particular theoretical interest are the construction of fast (polynomial) algorithms to solve the quantum many-particle problem, and the treatment of correlation in time-dependent processes.

A key feature of our theoretical approach is the use of modern renormalization group and multi-scale ideas. These enable us to extend the range of simulation from the simple to the complex, and from the small to the very large.

Some current phenomena under study include:

(i) Energy and electron transfer in conjugated polymers: specifically photosynthetic carotenoids, optoelectronic polymers, and carbon nanotubes,

(ii) Spin couplings in multiple-transition metal systems, including iron-sulfur proteins and molecular magnets.

(iii) Lattice models of high Tc superconductors.

Professor Phillip Geissler, University of California, Berkeley

Professor Troy Van Voorhis, MIT, says the following about his group's work:

The Van Voorhis group develops new methods that make reliable predictions about real systems for which existing techniques are inadequate. At present, our ideas center around the following major themes: the value of explicitly time-dependent theories, the importance of electron correlation and the proper treatment of delicate effects such as van der Waals forces and magnetic interactions.

Professor David Sherrill, Georgia Tech, has a strong research program focused on developing methods for properly describing the homolytic cleavage of chemical bonds and other cases in which more than a single Slater determinant becomes essential. He is also doing work on photochemistry, highly reactive species, and non-covalent interactions, all of which play central roles in many chemical processes.

Professor David Sherrill, Georgia Tech (above) and below with his group

Sherrill research group as of 2005

Professor Robert Cave, Harvey Mudd College

Professor Cave shows that one can work at a high-quality primarily undergraduate institution and still be a major contributor. He has done some of the best work aimed at describing the mechanisms of electron transfer.

Professor Jose Gascon, University of Connecticut

Professor Jose Gascon, University of Connecticut, is making use of combined quantum mechanics-molecular mechanics (QM-MM) approaches to study structure-function relations and biochemical reactivity of biomacromolecules.

Professor Ajit Thakkar, University of New Brunswick, has made many contributions to using quantum chemistry to study important chemical problems and has the following to say about his work:

My research concerns predictions of the properties of molecules and interactions between pairs of molecules. Such predictions are made using numerically-intensive computational methods based on quantum mechanics.

Professor Ajit Thakkar

At the University of Alberta, Prof. P. N. Roy is working on the following:

* Formal developments of the Feynman Path centroid approach for systems obeying Bose-Einstein statistics

* Path Integral simulations of quantum fluids

* Simulations of doped helium nano-droplets

* Exact Quantum Dynamics of weakly bound clusters

* Molecular Dynamics simulation of Protein-ligand systems in solution and in the gas phase

* Dynamics of hydrogen bonded complexes and proton transfer

* Development of semi-classical quantum dynamics approaches

Professor P. N. Roy, Univ. of Alberta

Also at Georgia Tech is the research team of Prof. Rigoberto Hernandez, who focuses on a wide variety of scientific challenges in condensed-phase dynamics including protein folding, polymerization dynamics, and developing Langevin-type dynamics methods for use on non-stationary stochastic dynamical events.

Professor Rigoberto Hernandez, Georgia Tech.

Electronic Structure of Molecular Magnetism

Molecular magnetism is currently a "hot" area in chemical physics because of the technological promise of colossal magneto-resistant materials and super-paramagnetic molecules. We are interested in developing a better fundamental understanding of magnetism that will allow us to predict the behavior of systems like these in an ab initio way. For example, one should be able to extract the Heisenberg exchange parameters (even for challenging oxo-bridged transition metal compounds) by simulating the response of the system to localized magnetic fields. We are also interested in extending the commonly used Heisenberg Hamiltonian to include spin-orbit interactions in a local manner. This would be useful, for example, if one is interested in assembling a large molecule out of smaller building blocks - by knowing the preferred axis of each fragment one could potentially extract the magnetic axis and anisotropy of the larger compound.
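To make the idea of a Heisenberg exchange parameter concrete, here is a minimal sketch for two coupled spin centers. It assumes the convention H = -2 J S1·S2 (other conventions differ by a sign or a factor of two), and the function names are hypothetical. It illustrates only how J is related to the energy gaps between total-spin states; it is not the localized-field response approach described above.

```python
from fractions import Fraction


def heisenberg_dimer_levels(j, s1, s2):
    """Energies E(S) of H = -2 J S1.S2 for two coupled spins s1 and s2.

    Uses S1.S2 = [S(S+1) - s1(s1+1) - s2(s2+1)] / 2, which gives
    E(S) = -J [S(S+1) - s1(s1+1) - s2(s2+1)].
    """
    s1, s2 = Fraction(s1), Fraction(s2)
    levels = {}
    s = abs(s1 - s2)
    while s <= s1 + s2:
        levels[s] = -j * (s * (s + 1) - s1 * (s1 + 1) - s2 * (s2 + 1))
        s += 1
    return levels


def exchange_from_gap(e_upper_spin, e_lower_spin, s_upper):
    """Invert the interval rule E(S) - E(S-1) = -2 J S to recover J."""
    return -(e_upper_spin - e_lower_spin) / (2 * s_upper)


# Illustrative (made-up) input: two spin-5/2 centers, J = -100 in arbitrary
# energy units. A negative J is antiferromagnetic in this convention.
levels = heisenberg_dimer_levels(-100, Fraction(5, 2), Fraction(5, 2))
j_recovered = exchange_from_gap(levels[1], levels[0], 1)
```

In this convention a negative J places the S = 0 state lowest, and any pair of adjacent spin-state energies is enough to recover J.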

Modeling Real-Time Electron Dynamics

Ab initio methods tend to focus the lion's share of attention on the description of electronic structure. However, there are a variety of systems where a focus on the electron dynamics is extremely fruitful. On the one hand, there are systems where it is the motion of the electrons that is interesting. This is true, for example, in conducting organic polymers and crystals - where it is charge migration that leads to conductivity - and in photosynthetic and photovoltaic systems - where excited state energy transfer determines the efficiency. Also, in a very deep way, dynamic simulations can offer improved pictures of static phenomena. Here, our attention is focused on the fluctuation-dissipation theorem, an exact relation between the static correlation function and the time-dependent response of the system, and on semiclassical techniques, which provide a simple ansatz for approximating quantum results using essentially classical information.

Prof. Aaron Dinner, Univ. of Chicago

One of the leading figures in the field of determining small-molecule potential energy surfaces from spectroscopic and dynamics data is Professor Bob LeRoy of the University of Waterloo who says the following about his research efforts:

The determination and implications of intermolecular forces and the spectroscopy and dynamics of small molecules and molecular clusters. The development and application of methods for simulating and analysing photodissociation spectra of small molecules, and the discrete spectra of diatomic molecules and of polyatomic Van der Waals molecules, in order to determine the underlying potential energy surfaces. Simulations and predictions of dynamical properties and their spectroscopic signatures for chromophore molecules in molecular clusters or matrix environments.

Professor Bob LeRoy, University of Waterloo
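As a simple illustration of how discrete spectra of a diatomic molecule constrain its potential energy curve, the sketch below uses the textbook Morse oscillator, whose vibrational term values are G(v) = we (v + 1/2) - wexe (v + 1/2)^2. It is not Professor LeRoy's methodology, which is far more sophisticated; the function names are hypothetical and the numerical constants are synthetic, chosen only to exercise the formulas.

```python
def term_value(v, we, wexe):
    """Morse vibrational term value G(v) = we (v + 1/2) - wexe (v + 1/2)^2."""
    return we * (v + 0.5) - wexe * (v + 0.5) ** 2


def morse_constants_from_spacings(dg0, dg1):
    """Recover (we, wexe) from the first two vibrational spacings.

    The spacings G(v+1) - G(v) = we - 2 wexe (v + 1) fall linearly with v,
    so dg0 = we - 2 wexe and dg1 = we - 4 wexe.
    """
    wexe = (dg0 - dg1) / 2.0
    we = dg0 + 2.0 * wexe
    return we, wexe


def morse_well_depth(we, wexe):
    """Well depth D_e = we^2 / (4 wexe) of the Morse potential."""
    return we * we / (4.0 * wexe)


# Synthetic (made-up) constants in cm^-1, used to generate "observed" levels.
levels = [term_value(v, 2000.0, 10.0) for v in range(3)]
we, wexe = morse_constants_from_spacings(levels[1] - levels[0],
                                         levels[2] - levels[1])
well_depth = morse_well_depth(we, wexe)
```

Two measured spacings fix the two Morse constants, and the well depth then follows. Real diatomic potentials deviate from the Morse form, which is why more flexible fitting procedures are needed in practice.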

Professor Dennis Salahub, University of Calgary, has contributed much to the development of modern density functional theory and tells us the following about his work:

His research group has improved Density Functional methods and software, which has helped to extend the range of applications. New improved functionals have been proposed, tested, and implemented in the code suite deMon, developed in Montreal and now in use in dozens of labs around the world. A fusion of DFT-deMon with other techniques (reaction fields, molecular dynamics, etc.) is underway. Current efforts are aimed at describing reactivity in complex environments: transition-metal catalysis, on the one hand, and enzymatic catalysis, on the other.

Professor Dennis Salahub, University of Calgary

Prof. Misha Ovchinnikov, Univ. of Rochester

Professor Jim Wright at Carleton University is carrying out electronic structure theory work on a variety of projects including the following in his words:

In my laboratory we use theoretical calculations to study chemical bonds in a variety of different environments. Part of the research is aimed at developing advances in theoretical methods, which can then be used to study important chemical properties such as the bond dissociation enthalpy, ionization potential, electron and proton affinity, hydrogen bond strengths and transition state energies.

Professor Jim Wright, Carleton University.

Prof. David Mazziotti, Univ. of Chicago

Professor Russell Boyd of Dalhousie University tells us the following about his research contributions, which are many:

Contemporary theoretical methods are used and developed to study a broad range of problems in chemistry, chemical physics and surface chemistry. Many projects involve collaboration with experimentalists and yield information which is unattainable by any other method.

Professor Russell Boyd, Dalhousie University

Professor Tom Ziegler at the University of Calgary has advanced the development and applications of density functional theory to a wide variety of important chemical and materials science problems including:

1. The optimization of transition state structures in elementary reaction steps of importance for homogeneous catalysis. Studies of the corresponding reaction paths by intrinsic reaction coordinates.

2. The dynamics of molecules chemisorbed on metal surfaces.

3. The influence of relativity on the chemical bond and the periodicity of the elements.

4. First-principles calculations of response properties such as frequencies, NMR and ESR parameters.

5. Modelling of steric bulk and solvation effects in elementary reaction steps.

6. Fundamental studies of density functional theory.

Professor Tom Ziegler, University of Calgary

Web page links to many of the more widely used programs offer convenient access:

Pacific Northwest Labs is developing a suite of programs called NWChem

The Gaussian suite of programs

The GAMESS program

The HyperChem programs of Hypercube, Inc.

The CAChe software packages from Fujitsu

The Spartan software package of Wavefunction, Inc.

The MOPAC program of CambridgeSoft

The Amber program of Prof. Peter Kollman, University of California, San Francisco

The CHARMm program

The programs of Accelrys, Inc.

The COLUMBUS program

The CADPAC program of Dr. Roger Amos

The programs of Wavefunction, Inc.

The ACES II program of Prof. Rod Bartlett.

The MOLCAS program of Prof. Bjorn Roos.

The MOLPRO quantum chemistry package of Profs. Werner and Knowles

The Vienna Ab Initio Simulations Package (VASP)

A nice compendium of available software is given in the Appendix of Lipkowitz K B and Boyd D B (Eds) 1996 Reviews in Computational Chemistry (New York, NY: VCH Publications) Vol 7